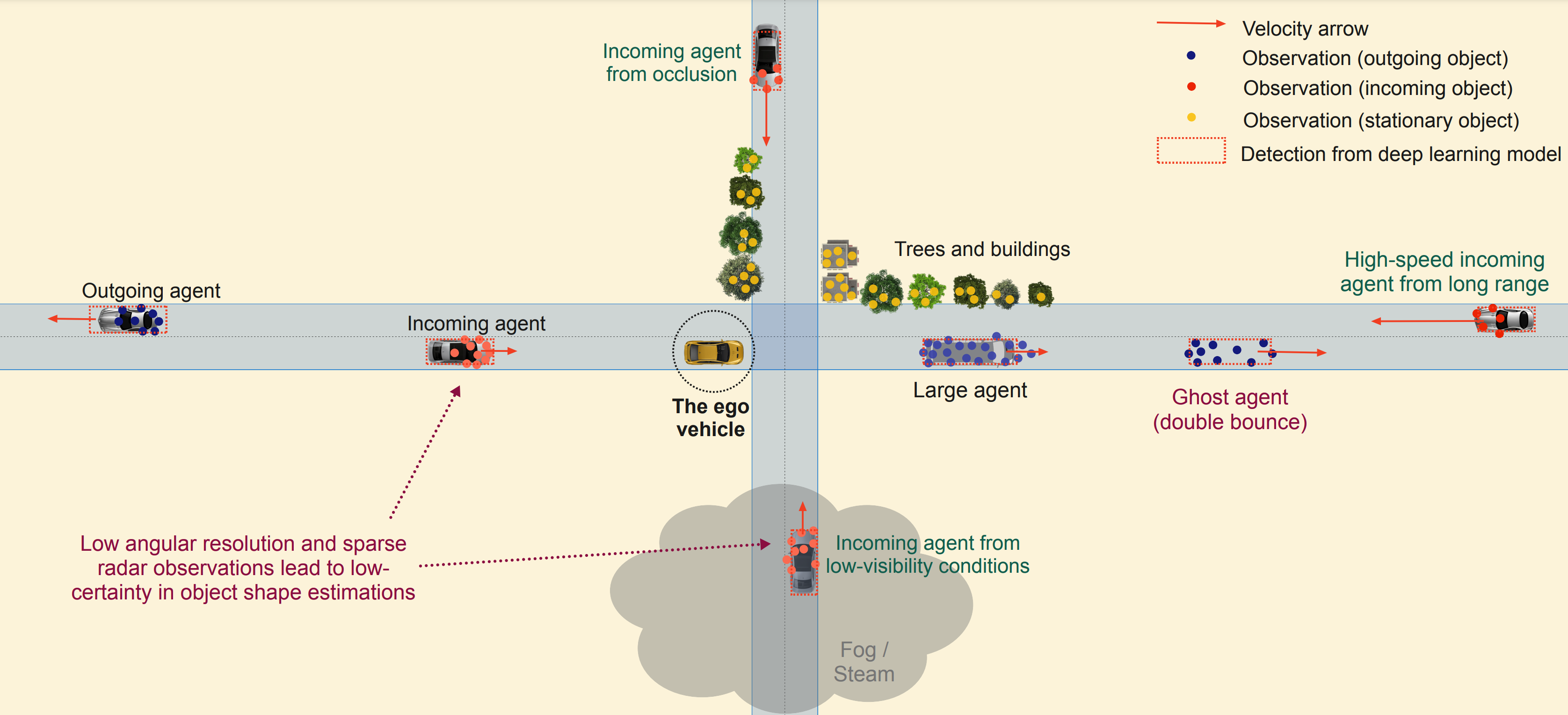

Radar is a key component of the suite of perception sensors used for safe and reliable navigation of autonomous vehicles. Its unique capabilities include high-resolution velocity imaging, detection of agents in occlusion and at long range, and robust performance in adverse weather conditions. However, radar data also presents challenges: it suffers from low resolution, sparsity, clutter, and high uncertainty, and good datasets are scarce. These challenges have limited radar deep learning research. As a result, current radar models are often influenced by lidar and vision models, which focus on optical features that are relatively weak in radar data; this leads to under-utilization of radar’s capabilities and diminishes its contribution to autonomous perception. This review seeks to encourage further deep learning research on autonomous radar data by 1) identifying key research themes and 2) offering a comprehensive overview of current opportunities and challenges in the field. Topics covered include early and late fusion, occupancy flow estimation, uncertainty modeling, and multipath detection. The paper also discusses radar fundamentals and data representation, presents a curated list of recent radar datasets, and reviews state-of-the-art lidar and vision models relevant to radar research.

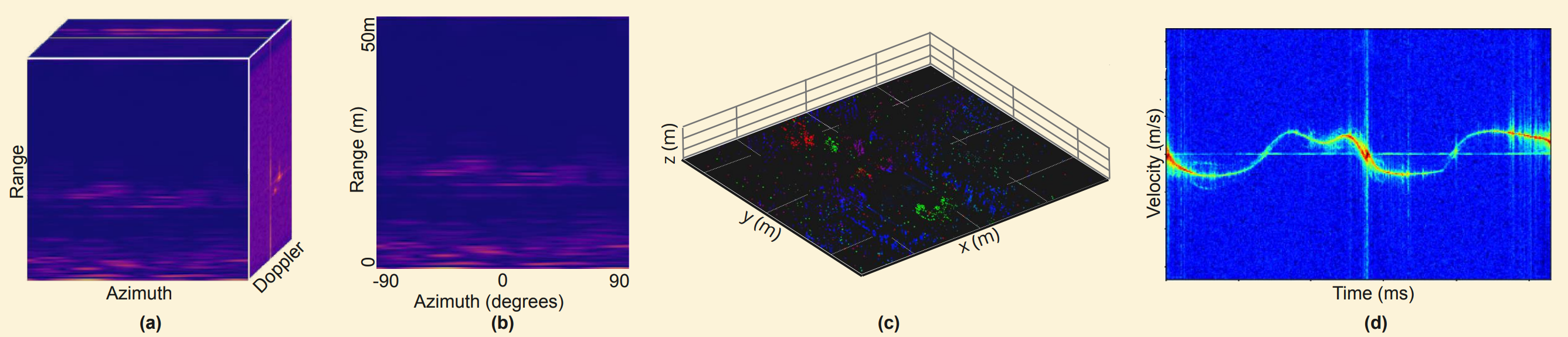

Among several common data formats, the radar point cloud (c) is the most popular due to its compact, information-rich representation.
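
To make the point-cloud format concrete, here is a minimal Python sketch of a single 4D radar detection, assuming a sensor that reports range, azimuth, elevation, Doppler velocity and radar cross-section (RCS) per point. The `RadarPoint` class and its field names are hypothetical; real datasets store these values in their own layouts and coordinate conventions. The sketch also converts each spherical measurement into sensor-frame Cartesian coordinates, which is how such points are usually placed in space.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarPoint:
    """One detection in a hypothetical 4D radar point cloud (field names are illustrative)."""
    range_m: float              # radial distance to the reflector, metres
    azimuth_rad: float          # horizontal angle; 0 points along the sensor x-axis
    elevation_rad: float        # vertical angle; 0 is the sensor's horizontal plane
    radial_velocity_mps: float  # Doppler (radial) velocity relative to the sensor
    rcs_dbsm: float             # radar cross-section, dBsm


def to_cartesian(p: RadarPoint) -> tuple[float, float, float]:
    """Convert the spherical measurement (range, azimuth, elevation) to sensor-frame x, y, z."""
    ground_range = p.range_m * math.cos(p.elevation_rad)  # projection onto the horizontal plane
    x = ground_range * math.cos(p.azimuth_rad)
    y = ground_range * math.sin(p.azimuth_rad)
    z = p.range_m * math.sin(p.elevation_rad)
    return x, y, z


# A two-point cloud: one reflector straight ahead, one at 90 degrees (positive y).
cloud = [
    RadarPoint(10.0, 0.0, 0.0, -2.5, 5.0),
    RadarPoint(20.0, math.pi / 2, 0.0, 0.0, 12.0),
]
for point in cloud:
    print(to_cartesian(point), point.radial_velocity_mps, point.rcs_dbsm)
```

Unlike a lidar point, each radar point here carries its own velocity and RCS, which is part of what makes the format compact yet information-rich.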

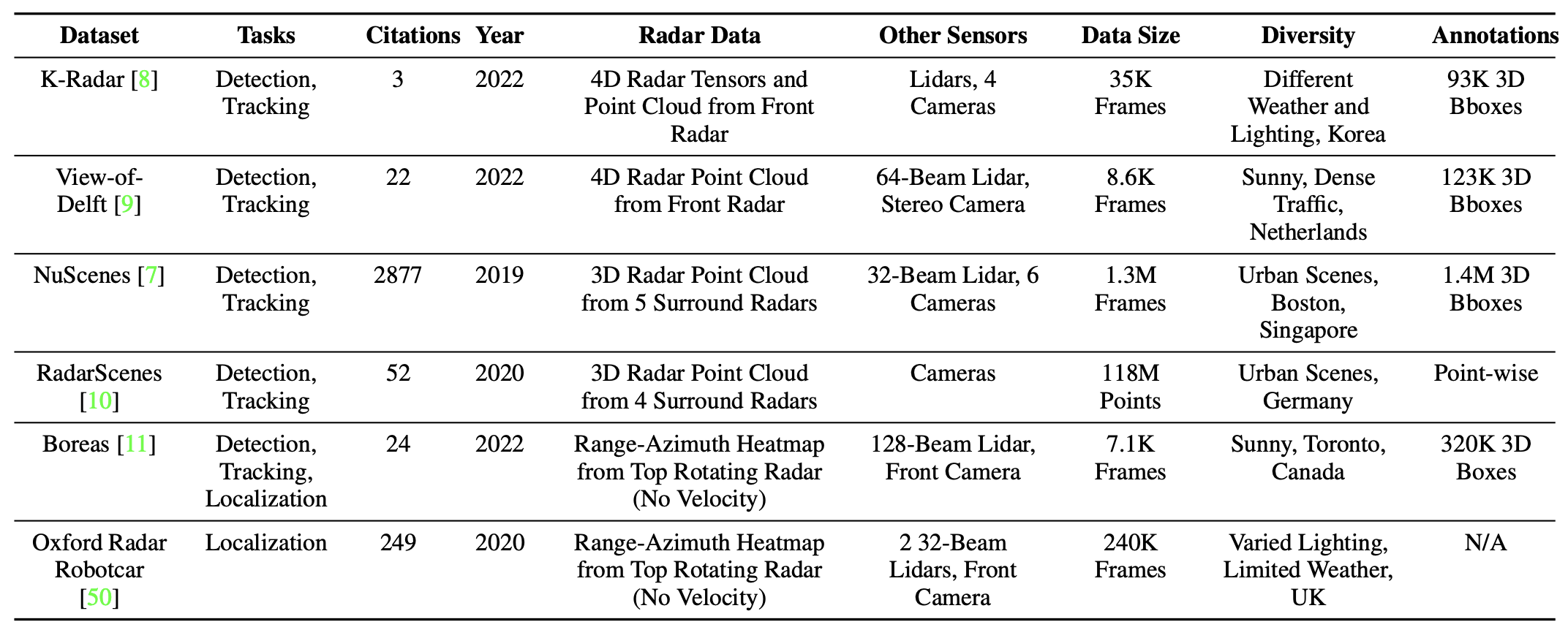

We provide a curated list of recent, high-quality radar datasets, focusing particularly on those that contain data from new-generation 4D radars.

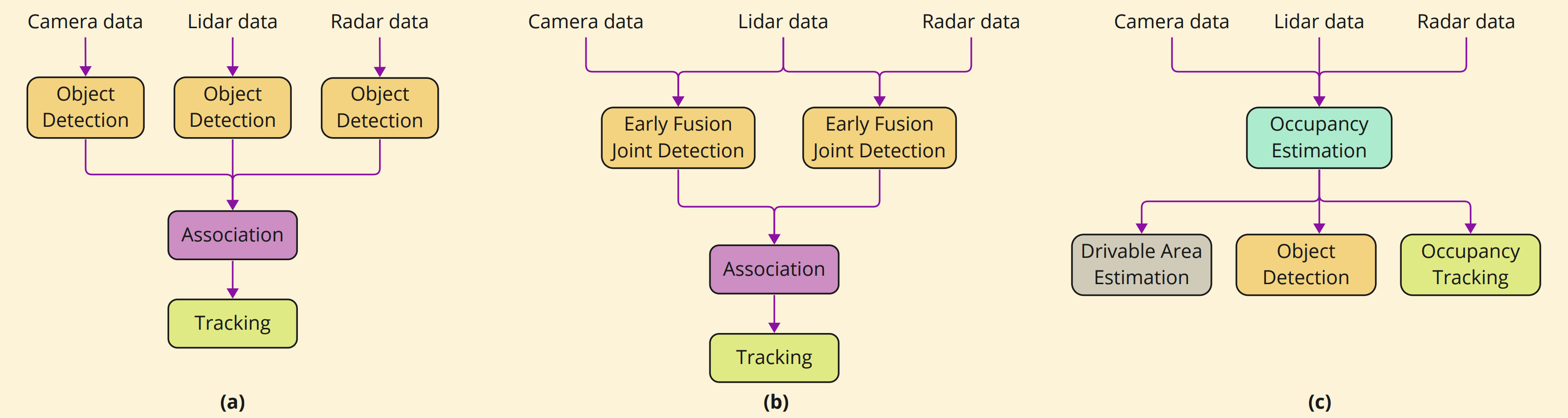

Radar models have struggled to make a difference to perception in traditional late-fusion, detection-based tracking approaches (a). Newer paradigms, such as early sensor fusion (b) and occupancy estimation (c), promise to significantly enhance radar’s contribution to perception.
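
The following sketch illustrates the late-fusion setting of (a) under simplifying assumptions: camera and radar each run their own detector, and the outputs are merged only at the object level by greedy nearest-neighbour matching within a distance gate. The `Detection` class, the `late_fuse` function and the 2 m gate are illustrative choices, not the pipeline described in the review.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Detection:
    x: float                          # BEV position in the ego frame, metres
    y: float
    velocity: Optional[float] = None  # radial velocity; only radar detections provide it


def late_fuse(camera: list[Detection], radar: list[Detection], gate_m: float = 2.0) -> list[Detection]:
    """Object-level (late) fusion: greedily match each camera detection to the nearest
    unused radar detection within `gate_m` and copy the radar velocity onto it.
    Positions are kept from the camera (a simplifying assumption)."""
    fused = []
    used = set()
    for cam in camera:
        best_idx, best_dist = None, gate_m
        for i, rad in enumerate(radar):
            if i in used:
                continue
            dist = math.hypot(cam.x - rad.x, cam.y - rad.y)
            if dist <= best_dist:
                best_idx, best_dist = i, dist
        if best_idx is None:
            fused.append(Detection(cam.x, cam.y))  # no radar support for this object
        else:
            used.add(best_idx)
            fused.append(Detection(cam.x, cam.y, radar[best_idx].velocity))
    return fused


camera_dets = [Detection(10.0, 0.5), Detection(30.0, -3.0)]
radar_dets = [Detection(10.8, 0.2, -4.1), Detection(50.0, 5.0, 1.0)]
fused = late_fuse(camera_dets, radar_dets)
print(fused)
```

In this simplified scheme a radar detection only matters if it lands near a camera detection; the radar detection at (50, 5) has no camera partner and is discarded, so whatever the radar alone saw is lost.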

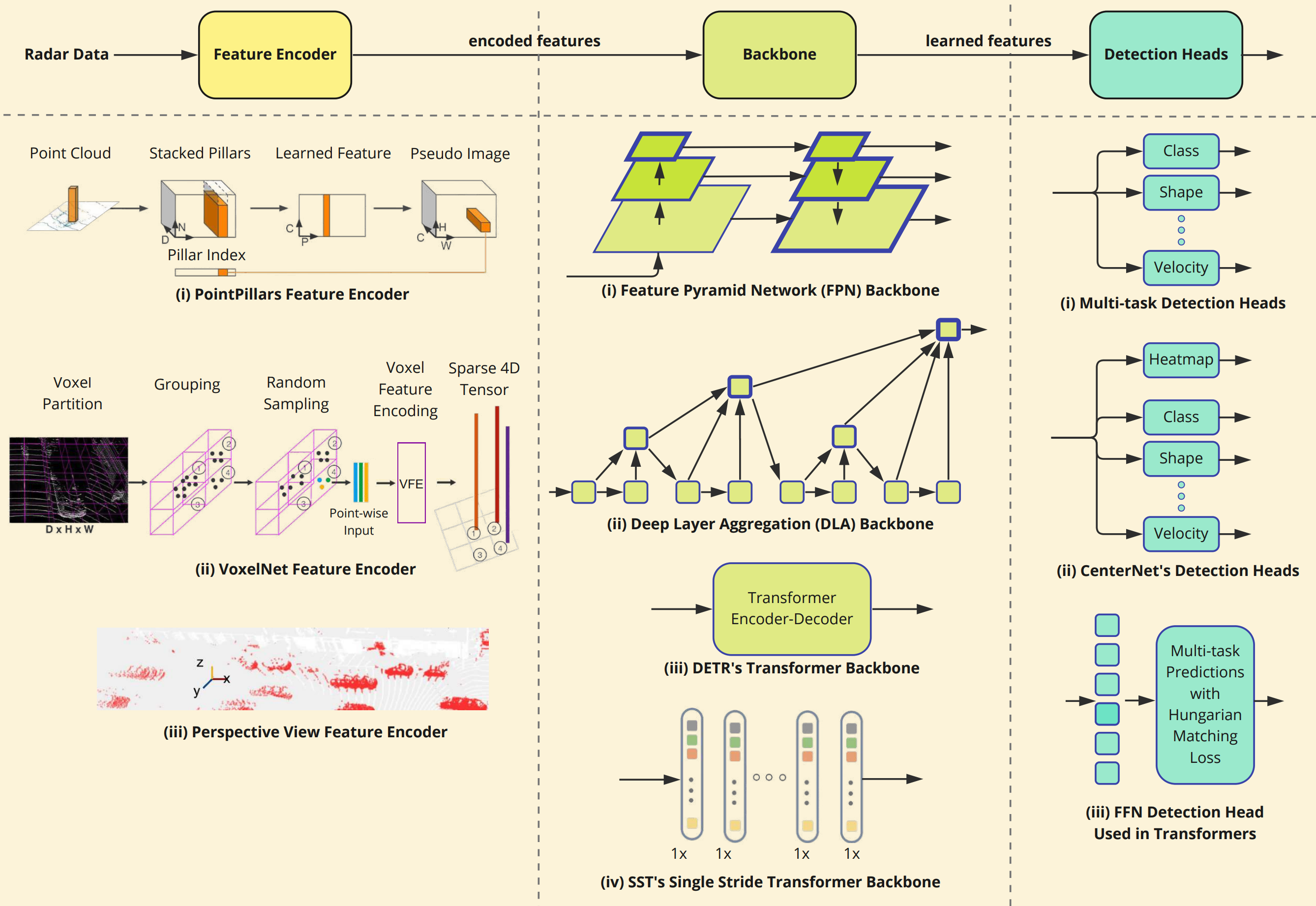

We provide a modular detector framework for radar deep learning models, where components from state-of-the-art models can be selectively incorporated at relevant stages with only minor modifications.
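
One way to picture such a modular framework in code is a detector assembled from interchangeable stages. The sketch below assumes four generic stages (encoder, backbone, neck, head); these names and the toy stage functions are hypothetical placeholders and need not match the stages in the figure. Swapping one stage returns a new detector and leaves the rest of the pipeline untouched.

```python
import dataclasses
from dataclasses import dataclass
from typing import Any, Callable

Stage = Callable[[Any], Any]  # any callable mapping one representation to the next


@dataclass(frozen=True)
class ModularDetector:
    encoder: Stage   # turns raw radar points into features (hypothetical stage name)
    backbone: Stage  # feature extraction
    neck: Stage      # feature aggregation
    head: Stage      # task-specific output, e.g. detections

    def __call__(self, points: Any) -> Any:
        features = self.encoder(points)
        features = self.backbone(features)
        features = self.neck(features)
        return self.head(features)

    def swap(self, **stages: Stage) -> "ModularDetector":
        """Return a copy with the named stages replaced, e.g. swap(head=new_head)."""
        return dataclasses.replace(self, **stages)


# Toy stages, for illustration only.
def encode_rcs(points):
    return [p["rcs"] for p in points]


def identity(features):
    return features


def make_threshold_head(min_rcs):
    # Reports the indices of points whose feature reaches the threshold.
    return lambda features: [i for i, f in enumerate(features) if f >= min_rcs]


detector = ModularDetector(encode_rcs, identity, identity, make_threshold_head(10.0))
points = [{"rcs": 3.0}, {"rcs": 15.0}, {"rcs": 11.0}]
print(detector(points))  # [1, 2]

stricter = detector.swap(head=make_threshold_head(12.0))
print(stricter(points))  # [1]
```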

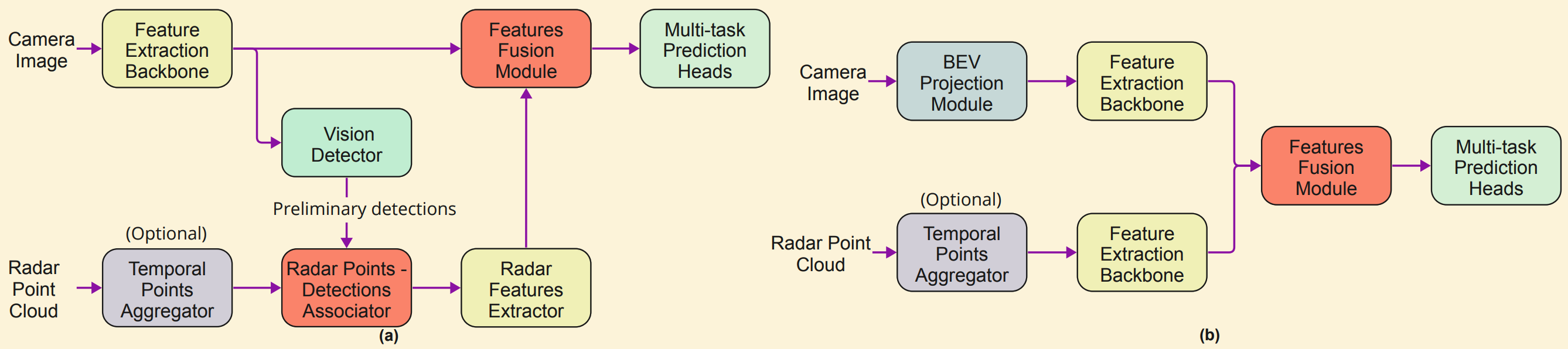

There are two popular approaches to camera-radar early fusion: (a) fusion in the perspective view, which improves depth estimation of camera detections and adds object velocity to those detections; and (b) fusion in the bird’s-eye view (BEV), which infers joint detections from the BEV features of camera and radar.
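
For the BEV approach in (b), a common first step is to rasterize radar points into the same BEV grid as the camera features and then combine the two per cell. The sketch below assumes the camera BEV features already exist (real models learn them through a view transform) and uses simple channel-wise concatenation as the fusion operator. The function names, grid extent, cell size and per-cell radar channels (point count, maximum RCS, mean radial velocity) are illustrative assumptions.

```python
import math


def rasterize_radar_bev(points, x_max=50.0, y_half=25.0, cell=1.0):
    """Scatter (x, y, radial_velocity, rcs) points, in metres in the ego frame, onto a BEV grid
    covering 0 <= x < x_max and -y_half <= y < y_half.
    Each cell ends up as [point count, max RCS, mean radial velocity]."""
    n_rows = int(x_max / cell)
    n_cols = int(2 * y_half / cell)
    grid = [[[0.0, -math.inf, 0.0] for _ in range(n_cols)] for _ in range(n_rows)]
    for x, y, radial_velocity, rcs in points:
        row = math.floor(x / cell)
        col = math.floor((y + y_half) / cell)
        if not (0 <= row < n_rows and 0 <= col < n_cols):
            continue  # point lies outside the BEV extent
        feats = grid[row][col]
        feats[0] += 1.0
        feats[1] = max(feats[1], rcs)
        feats[2] += radial_velocity  # summed here, averaged below
    for grid_row in grid:
        for feats in grid_row:
            if feats[0] > 0:
                feats[2] /= feats[0]
            else:
                feats[1] = 0.0  # empty cell: replace the -inf placeholder
    return grid


def fuse_bev(camera_bev, radar_bev):
    """Channel-wise concatenation of aligned camera and radar BEV feature grids."""
    if len(camera_bev) != len(radar_bev) or any(
        len(c) != len(r) for c, r in zip(camera_bev, radar_bev)
    ):
        raise ValueError("camera and radar BEV grids must have the same shape")
    return [[cam + rad for cam, rad in zip(cam_row, rad_row)]
            for cam_row, rad_row in zip(camera_bev, radar_bev)]


points = [(1.5, 0.5, -3.0, 8.0), (1.2, 0.9, -1.0, 2.0), (10.0, 0.0, 0.0, 1.0)]
radar_bev = rasterize_radar_bev(points, x_max=4.0, y_half=2.0, cell=1.0)
camera_bev = [[[0.1, 0.2] for _ in range(4)] for _ in range(4)]  # stand-in camera features
fused = fuse_bev(camera_bev, radar_bev)
print(fused[1][2])  # [0.1, 0.2, 2.0, 8.0, -2.0]
```

Concatenation is the simplest fusion operator; learned BEV fusion models typically follow it with convolutions or attention, which this sketch omits.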

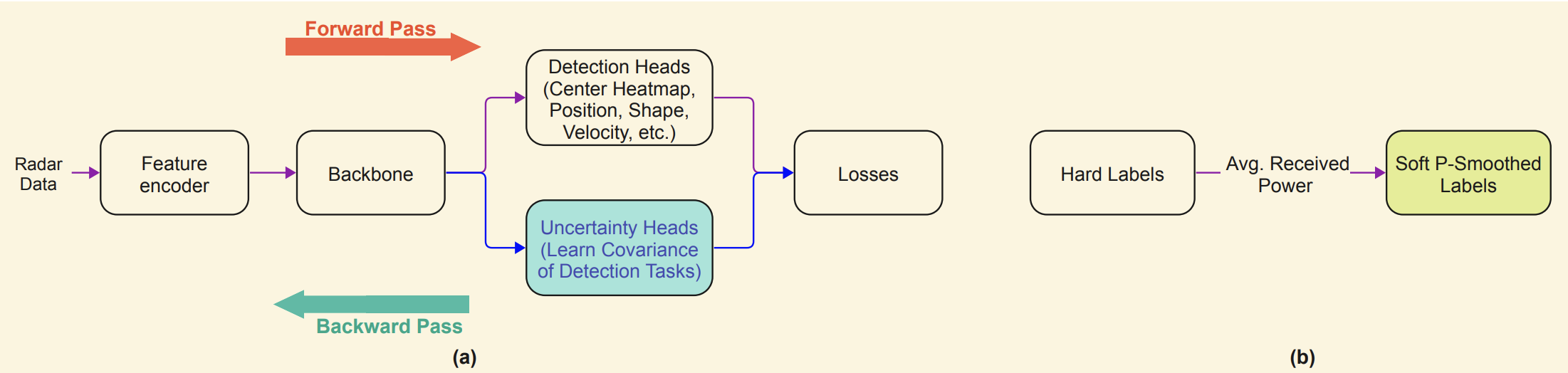

The high uncertainty in radar data results in less reliable detections by learned radar models. We discuss sources of uncertainty in data and deep learning techniques to improve the robustness of detections.
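
As one illustration of a deep learning technique for estimating model uncertainty, the sketch below applies Monte Carlo dropout to a toy linear model: dropout stays active at inference, the input is passed through many times, and the spread of the outputs serves as an uncertainty estimate. This is a generic technique chosen for illustration; the function name, the toy model and all parameters are hypothetical, and the review may emphasize other methods.

```python
import random
import statistics


def mc_dropout_predict(features, weights, p_drop=0.2, passes=50, seed=0):
    """Monte Carlo dropout on a toy linear model (dropout applied to its inputs).
    Returns (mean, standard deviation) of the outputs over `passes` stochastic passes."""
    if not 0.0 <= p_drop < 1.0:
        raise ValueError("p_drop must be in [0, 1)")
    rng = random.Random(seed)
    keep = 1.0 - p_drop
    outputs = []
    for _ in range(passes):
        y = 0.0
        for w, f in zip(weights, features):
            if rng.random() >= p_drop:  # this input survives the pass
                y += w * f / keep       # inverted-dropout rescaling keeps the expected value
        outputs.append(y)
    mean = statistics.fmean(outputs)
    return mean, statistics.pstdev(outputs, mu=mean)


mean, std = mc_dropout_predict([1.0, 2.0, 0.5], [0.8, -0.3, 1.2], p_drop=0.2, passes=100)
print(f"prediction {mean:.3f} +/- {std:.3f}")
```

A detection whose prediction varies strongly across passes can then be down-weighted or flagged as less reliable.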

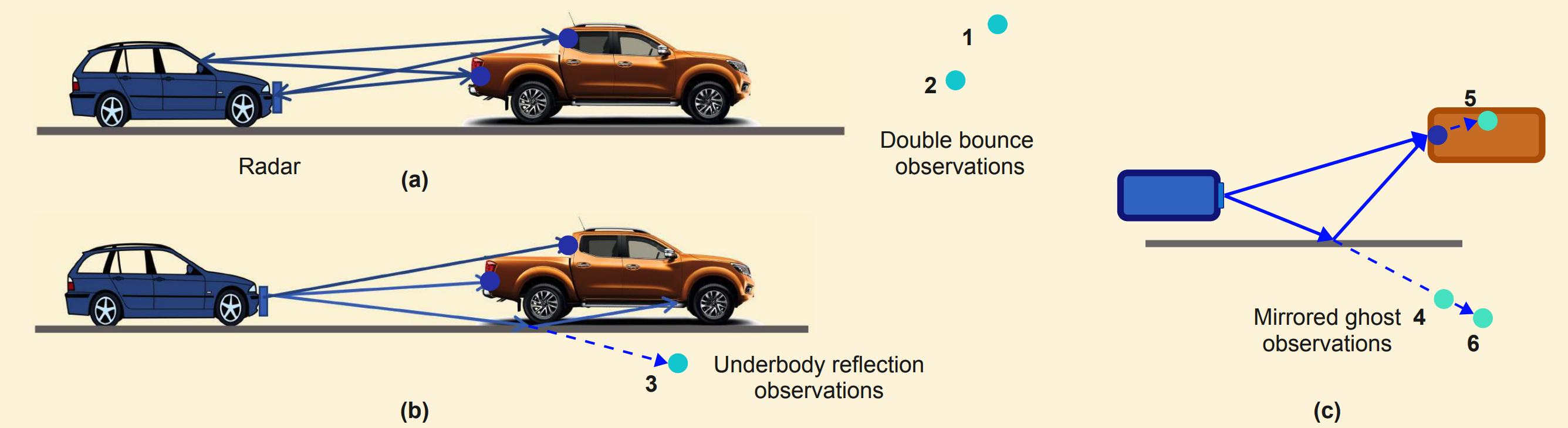

Radar data contains a significant amount of clutter, which leads to false-positive detections. We identify three main sources of clutter and methods to remove it.
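
Since the three clutter sources are not spelled out here, the sketch below shows only a generic, hypothetical clean-up step for BEV radar points: drop weak returns below an RCS threshold, then remove isolated points with too few neighbours within a radius. Both thresholds are illustrative assumptions. Because radar is sparse, an aggressive neighbour filter can also remove genuine detections of small or distant objects.

```python
import math


def filter_clutter(points, min_rcs=-10.0, radius=1.5, min_neighbors=2):
    """Hypothetical clutter filter for (x, y, rcs) points in metres / dBsm.
    Step 1 drops weak returns; step 2 drops points with fewer than `min_neighbors`
    other strong points within `radius` (O(n^2), fine for small clouds)."""
    strong = [p for p in points if p[2] >= min_rcs]
    kept = []
    for i, (xi, yi, rcs_i) in enumerate(strong):
        neighbors = sum(
            1
            for j, (xj, yj, _) in enumerate(strong)
            if j != i and math.hypot(xi - xj, yi - yj) <= radius
        )
        if neighbors >= min_neighbors:
            kept.append((xi, yi, rcs_i))
    return kept


points = [
    (0.0, 0.0, 5.0), (0.5, 0.0, 4.0), (1.0, 0.0, 6.0),  # a small cluster
    (20.0, 20.0, 5.0),                                   # isolated return
    (0.2, 0.3, -20.0),                                   # weak return
]
print(filter_clutter(points))  # [(0.0, 0.0, 5.0), (0.5, 0.0, 4.0), (1.0, 0.0, 6.0)]
```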